I remember the first time a Google core update hit one of my sites: traffic fell, my inbox filled with worried client messages, and for a few sleep-deprived days I felt like I was firefighting without a map. Over the years I’ve learned that the best way out of that panic is methodical diagnosis using the signals available in Google Search Console (GSC), combined with targeted content and technical fixes. Below I’ll walk you through how I use Search Console to decode what happened and build a recovery plan that actually works.

Confirm the timing and scope

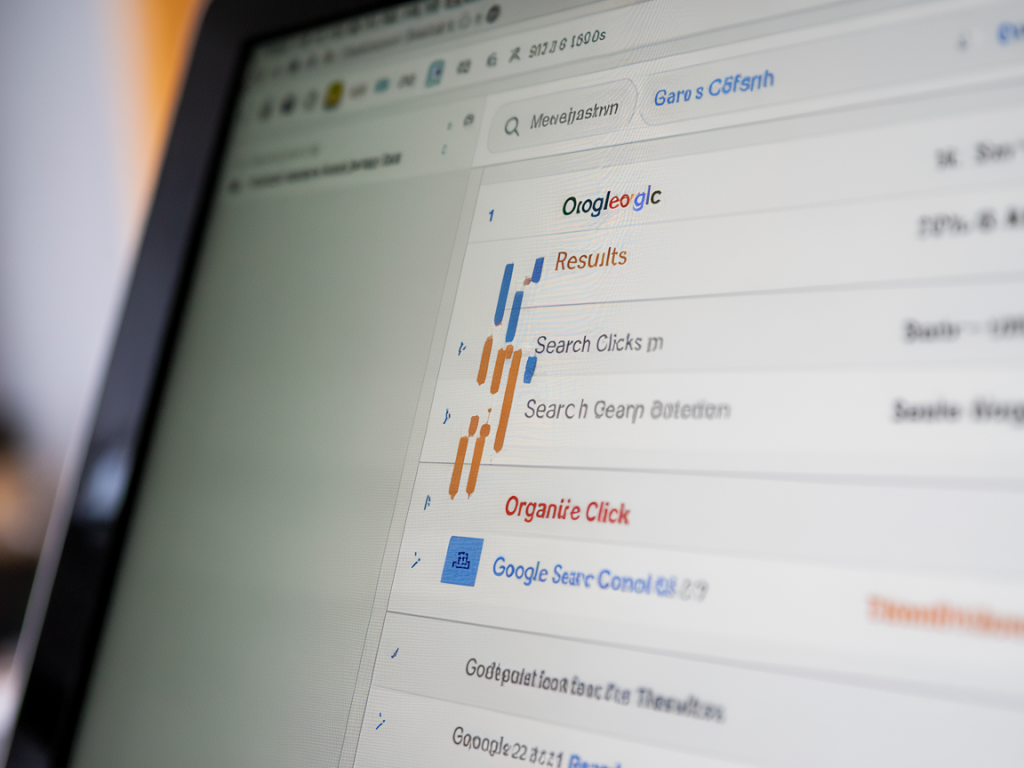

The very first thing I do is confirm that the traffic drop aligns with the core update. Core updates roll out over days or weeks rather than on a single day, so in GSC I open Performance and set the date range to cover the week before the rollout started and at least the week after. If impressions and clicks drop sharply once the rollout begins, that’s a strong signal the update affected search visibility.

- Tip: Toggle between "Total clicks", "Total impressions", and "Average CTR" to see which metric moved most. Drops in impressions suggest ranking losses; drops in CTR with stable impressions can point to presentation issues (title tags and meta descriptions).

- Tip: Compare the same date ranges year-over-year to exclude seasonality.
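One way to put numbers on this comparison is a small script run over a daily export of the Performance report. The sketch below is a minimal illustration, not a prescribed workflow: the row layout (`date`, `clicks`, `impressions`), the function names, and the update date are all assumptions made for the example.

```python
from datetime import date, timedelta

def summarize(rows, start, end):
    """Total clicks, impressions and CTR for rows dated within [start, end]."""
    selected = [r for r in rows if start <= r["date"] <= end]
    clicks = sum(r["clicks"] for r in selected)
    impressions = sum(r["impressions"] for r in selected)
    ctr = clicks / impressions if impressions else 0.0
    return {"clicks": clicks, "impressions": impressions, "ctr": ctr}

def pct_change(before, after):
    """Relative change from before to after; None when the baseline is zero."""
    return None if before == 0 else (after - before) / before

def compare_windows(rows, update_day, days=7):
    """Compare the `days` before update_day with the `days` starting on it."""
    before = summarize(rows, update_day - timedelta(days=days),
                       update_day - timedelta(days=1))
    after = summarize(rows, update_day, update_day + timedelta(days=days - 1))
    return {m: pct_change(before[m], after[m])
            for m in ("clicks", "impressions", "ctr")}

# Hypothetical data: the date is a placeholder, not a real update date.
update_day = date(2025, 1, 15)
rows = [
    {
        "date": update_day + timedelta(days=offset),
        "clicks": 100 if offset < 0 else 60,
        "impressions": 2000 if offset < 0 else 1200,
    }
    for offset in range(-7, 7)
]

changes = compare_windows(rows, update_day)
for metric, change in changes.items():
    print(metric, "n/a" if change is None else f"{change:+.1%}")
```

In this made-up data, clicks and impressions fall together while CTR holds steady, which is the pattern that points to ranking losses rather than a snippet problem. Running the same function on last year’s rows for the same dates gives the year-over-year check.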

Segment by query, page, device and country

Next I slice the data to find where the drop is concentrated.

- Queries: I filter to the top losing queries. Are they transactional queries, branded queries, or informational long tails? If branded queries dropped, something unusual is going on (brand reputation, indexation issues). If informational content dropped, it’s likely related to content quality or relevance.

- Pages: I filter by pages to identify which templates or sections of the site took the hit. Often I find the damage concentrated in a category template or a group of thin content pages.

- Devices & Countries: I check whether mobile or desktop lost more — a mobile-only drop could point at Core Web Vitals or mobile rendering issues. Geography segmentation can reveal algorithm tweaks affecting particular locales.
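To see whether losses cluster in one template or section, the page-level export can be rolled up by the first path segment. This is a rough sketch under assumed names: the `clicks_before` and `clicks_after` fields and the example URLs are hypothetical, and the first path segment is only a stand-in for however a given site maps URLs to templates.

```python
from collections import defaultdict
from urllib.parse import urlparse

def section_of(url):
    """Return the first path segment, e.g. '/blog' for '/blog/post-1'."""
    first = urlparse(url).path.strip("/").split("/")[0]
    return "/" + first if first else "/"

def losses_by_section(pages):
    """Sum clicks per section and sort so the biggest loss comes first."""
    totals = defaultdict(lambda: {"before": 0, "after": 0})
    for page in pages:
        section = section_of(page["url"])
        totals[section]["before"] += page["clicks_before"]
        totals[section]["after"] += page["clicks_after"]
    report = [
        (section, t["before"], t["after"], t["after"] - t["before"])
        for section, t in totals.items()
    ]
    return sorted(report, key=lambda row: row[3])

# Hypothetical page-level rows built from two Performance exports.
pages = [
    {"url": "https://example.com/category/shoes", "clicks_before": 900, "clicks_after": 300},
    {"url": "https://example.com/category/bags", "clicks_before": 700, "clicks_after": 250},
    {"url": "https://example.com/blog/care-guide", "clicks_before": 400, "clicks_after": 380},
    {"url": "https://example.com/", "clicks_before": 1000, "clicks_after": 990},
]

report = losses_by_section(pages)
for section, before, after, delta in report:
    print(f"{section:<12} {before:>6} {after:>6} {delta:>+7}")
```

The same grouping works for device or country if those columns are included in the export; only the key function changes.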

Look for patterns in dropped pages

Once I have a list of losing pages, I run a quick qualitative review. I ask myself:

- Are these pages thin or highly templated (e.g., faceted category pages)?

- Are they primarily user-generated content with low moderation?

- Do they have outdated or unhelpful information?

- Are they duplicate or near-duplicate variations across the site?

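It’s surprising how often a core update demotes a whole content type rather than individual pages: for example, dozens of “how-to” pages written at low depth, or hundreds of product pages auto-generated from weak templates. Strictly speaking this is a reassessment rather than a penalty, which is why the fix is usually better content and not a reconsideration request.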

Cross-check with Index Coverage and URL Inspection

GSC’s Index Coverage report (now labeled Page indexing, under Indexing > Pages) and the URL Inspection tool are critical.

- In the Page indexing report I look for newly reported indexing errors or a surge in "Discovered – currently not indexed" statuses that began around the update. This can indicate crawl budget or other indexing problems.

- For representative losing pages I run the URL Inspection to confirm they are indexed, discover when Google last crawled them, and check the rendered HTML. If Google’s rendered view is missing key content (e.g., JS-rendered content that fails), that’s a direct technical cause.

Check Manual Actions, Security Issues and Sitemaps

Manual actions and security problems are rare, but I always rule them out in GSC. A manual action shows up under Security & Manual Actions and calls for a very different process: fix the underlying problem, then file a reconsideration request.

I also verify the sitemap is up-to-date and being read by Google. Missing or stale sitemaps can delay re-discovery of improved pages.
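A quick way to spot a stale sitemap is to compare its `lastmod` values against the dates pages were actually edited. The sketch below assumes a standard sitemaps.org `urlset` file and a hypothetical `edited` mapping of URL to last edit date (ISO `YYYY-MM-DD`); it does not handle sitemap index files, and the URLs and dates are made up.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_entries(xml_text):
    """Map each <loc> in a urlset sitemap to its <lastmod> (or None)."""
    root = ET.fromstring(xml_text)
    entries = {}
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)
        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        if loc:
            entries[loc.strip()] = lastmod.strip() if lastmod else None
    return entries

def stale_or_missing(entries, edited):
    """Flag edited pages that are absent or whose lastmod predates the edit."""
    problems = {}
    for url, edit_date in edited.items():
        if url not in entries:
            problems[url] = "missing from sitemap"
        elif entries[url] is None or entries[url][:10] < edit_date:
            # ISO dates compare correctly as strings.
            problems[url] = "lastmod older than last edit"
    return problems

# Hypothetical sitemap and edit log.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/guide</loc><lastmod>2025-11-01</lastmod></url>
  <url><loc>https://example.com/pricing</loc><lastmod>2025-12-20</lastmod></url>
</urlset>"""
edited = {
    "https://example.com/guide": "2025-12-15",
    "https://example.com/pricing": "2025-12-15",
    "https://example.com/new-faq": "2025-12-15",
}

problems = stale_or_missing(sitemap_entries(sample), edited)
for url, reason in problems.items():
    print(url, "->", reason)
```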

Review Core Web Vitals and Mobile Usability

Over successive updates, Google has increasingly factored page experience into rankings. In GSC’s Core Web Vitals report I check which URLs have failing metrics: LCP, INP (which replaced FID as a Core Web Vital in March 2024) or CLS. A site-wide LCP regression after an update could amplify ranking declines, especially on mobile.

- If I find problems, I prioritize fixes like optimizing server response, compressing images, deferring non-critical JS, and improving font loading. Tools I use: Lighthouse, PageSpeed Insights, and Chrome UX Report.
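When triaging many URLs it helps to apply the published thresholds mechanically. The sketch below encodes Google’s documented boundaries for LCP (2.5 s / 4 s), INP (200 ms / 500 ms; INP replaced FID as a Core Web Vital in March 2024) and CLS (0.1 / 0.25). The metric keys and the sample measurements are hypothetical; real values would come from field data such as the Chrome UX Report.

```python
# (good upper bound, needs-improvement upper bound) per metric.
THRESHOLDS = {
    "lcp_ms": (2500, 4000),
    "inp_ms": (200, 500),
    "cls": (0.1, 0.25),
}

def rate(metric, value):
    """Classify one 75th-percentile value as good / needs improvement / poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"

def failing_metrics(measurements):
    """Return only the metrics that are not rated 'good'."""
    ratings = {metric: rate(metric, value) for metric, value in measurements.items()}
    return {metric: r for metric, r in ratings.items() if r != "good"}

# Hypothetical field data for one URL group.
mobile_product_pages = {"lcp_ms": 4300, "inp_ms": 180, "cls": 0.18}
print(failing_metrics(mobile_product_pages))
```

Sorting URL groups by the number of non-good metrics, weighted by traffic, is one simple way to decide which template to fix first.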

Audit content quality and E-E-A-T signals

Core updates often target low-value or untrustworthy content. For the pages that lost visibility I evaluate:

- Depth and usefulness of the content: Can I answer the user’s intent better? Is there unique research, examples, visuals?

- Authoritativeness and trust: Do pages have clear author bios, references, and supporting citations? For transactional pages, are policies and trust signals visible?

- User experience: Are there intrusive ads, poor layout, or misleading CTAs?

When necessary, I rewrite, expand and add credible sources. I’ve found that adding author bios and date-stamped updates with transparent sources often helps on YMYL or advice content.

Use Search Console to test hypotheses

GSC supports iterative testing. After I make changes to a sample set of pages, I:

- Resubmit the updated URLs via URL Inspection to request indexing.

- Monitor performance for those URLs over 2–4 weeks using the Performance report filtered to the specific pages.

- Compare the trend against similar pages I did not change — this helps isolate whether recovery is due to improvements or broader algorithm shifts.
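The comparison against unchanged pages amounts to subtracting the control group’s trend from the treated group’s trend. Here is a minimal sketch of that arithmetic, assuming click totals for equal-length windows before and after the change; the field names and numbers are invented for illustration, and with small page sets the result is a hint rather than proof.

```python
def relative_change(pages):
    """Relative change in total clicks across a group of pages."""
    before = sum(p["clicks_before"] for p in pages)
    after = sum(p["clicks_after"] for p in pages)
    if before == 0:
        raise ValueError("baseline clicks must be non-zero")
    return (after - before) / before

def net_uplift(treated, control):
    """Treated trend minus control trend (a simple difference-in-differences)."""
    t = relative_change(treated)
    c = relative_change(control)
    return {"treated": t, "control": c, "net": t - c}

# Hypothetical click totals for rewritten pages and similar untouched pages.
treated = [
    {"url": "/guide-a", "clicks_before": 200, "clicks_after": 260},
    {"url": "/guide-b", "clicks_before": 200, "clicks_after": 240},
]
control = [
    {"url": "/guide-c", "clicks_before": 500, "clicks_after": 520},
    {"url": "/guide-d", "clicks_before": 500, "clicks_after": 530},
]

result = net_uplift(treated, control)
print({name: f"{value:+.0%}" for name, value in result.items()})
```

A positive `net` suggests the edits helped beyond whatever lifted the whole site; a value near zero suggests the movement came from a broader shift.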

Technical checklist I run through

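- Canonical tags: Ensure each page’s canonical points to the correct version and that canonical signals don’t conflict (for example, two different canonical tags on one page, or a canonical pointing at a URL that redirects or is noindexed).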

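- Robots.txt and meta robots: Confirm there are no accidental Disallow rules in robots.txt and no accidental noindex directives in meta robots tags or X-Robots-Tag headers. (robots.txt controls crawling, not indexing, so a noindex on a blocked page will never be seen.)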

- Structured data: Validate schema with Google’s Rich Results Test; structured data errors can reduce SERP features that drive clicks.

- Internal linking: Strengthen internal links to key pages to help Google understand their importance.
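Parts of this checklist can be spot-checked from a page’s HTML. The sketch below uses only the standard library to look for a `noindex` meta robots tag and for missing, conflicting, or unexpected canonical tags. It is deliberately limited: it does not read X-Robots-Tag headers or robots.txt, and it says nothing about which canonical Google actually selected (URL Inspection shows that). The class and function names are made up for the example.

```python
from html.parser import HTMLParser

class HeadSignals(HTMLParser):
    """Collect meta robots directives and canonical link targets."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.robots.append((attr.get("content") or "").lower())
        elif tag == "link" and "canonical" in (attr.get("rel") or "").lower().split():
            self.canonicals.append(attr.get("href"))

def audit(html, expected_url):
    """Return a list of indexing-related issues found in the HTML."""
    parser = HeadSignals()
    parser.feed(html)
    issues = []
    if any("noindex" in directive for directive in parser.robots):
        issues.append("noindex")
    if not parser.canonicals:
        issues.append("missing canonical")
    elif len(set(parser.canonicals)) > 1:
        issues.append("conflicting canonicals")
    elif parser.canonicals[0] != expected_url:
        issues.append("canonical points elsewhere")
    return issues

# Hypothetical page source with an accidental noindex.
page = (
    '<html><head><meta name="robots" content="noindex, follow">'
    '<link rel="canonical" href="https://example.com/a"></head></html>'
)
print(audit(page, "https://example.com/a"))
```

For JavaScript-heavy sites, the input should be the rendered HTML rather than the raw response, since tags injected client-side will not appear in the source.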

Prioritize fixes and measure impact

I prioritize by effort vs. impact. Quick wins include:

- Fixing critical CWV issues on high-traffic pages.

- Refreshing titles and meta descriptions to improve CTR (track CTR changes in GSC).

- Consolidating thin pages via 301 redirects or combining similar content.

For tracking I create a small table that I update weekly with key signals from GSC for high-priority pages:

| URL | Clicks (before) | Clicks (after) | Impressions | Last crawl | Action |
|---|---|---|---|---|---|
| /example-article | 1,200 | 480 | 8,000 | 2025-12-15 | Content rewrite + resubmit |
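If the table lives in a markdown file, the weekly rows can be generated rather than typed. This small sketch formats one row and adds a percentage-change column, which is an addition of mine and not part of the table above; the function name and argument order are hypothetical.

```python
def tracking_row(url, clicks_before, clicks_after, impressions, last_crawl, action):
    """Format one markdown table row, including the relative change in clicks."""
    change = (clicks_after - clicks_before) / clicks_before if clicks_before else 0.0
    cells = [
        url,
        f"{clicks_before:,}",
        f"{clicks_after:,}",
        f"{change:+.0%}",
        f"{impressions:,}",
        last_crawl,
        action,
    ]
    return "| " + " | ".join(cells) + " |"

row = tracking_row("/example-article", 1200, 480, 8000, "2025-12-15",
                   "Content rewrite + resubmit")
print(row)
```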

What recovery looks like and how long it takes

Recovery after a core update is rarely instantaneous. In my experience you may see partial improvements within a few weeks for small fixes (CTR tweaks, index resubmits), while content and authority changes typically take 6–12 weeks to be reflected. Large sites or E-E-A-T-dependent niches can take longer.

Don’t expect a single silver-bullet change. The best recoveries are cumulative: technical stability, improved content quality, clearer authoritativeness signals, and ongoing monitoring in GSC.

Keep a log and communicate with stakeholders

I keep a change log with dates of fixes, the pages affected, and the GSC evidence (screenshots or exported CSV snippets). This serves two purposes: it helps analyze cause and effect, and it provides transparent communication to clients or leadership when results take time.

Finally, I test and iterate. If a change yields neutral impact, I try a different hypothesis. Search Console is my laboratory: it shows what users see in search and gives the performance signals I need to make data-driven recovery decisions.